There have been so many recent articles on ChatGPT that I wasn’t originally planning to write one. It’s designed to be an AI assistant (think Alexa, Siri) rather than to provide companionship, so it isn’t really comparable to Replika, Kuki or Woebot. However, a post on a Facebook group made me wonder how clear that distinction is. So here we are, and I present to you yet another article on ChatGPT.

Firstly, how does OpenAI describe ChatGPT?

We’ve trained a model called ChatGPT which interacts in a conversational way. The dialogue format makes it possible for ChatGPT to answer followup questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests.

Let’s start with a health warning from Cassie Kozyrkov, from her (partly AI-generated) article “Introducing ChatGPT!”:

There’s something very important you need to know: ChatGPT is a bullshitter. The essence of bullshit is unconcern with truth. It’s not a liar because to be a liar, you must know the truth and intend to mislead. ChatGPT is indifferent to the truth.

If you take nothing else away from this article, please remember this: ChatGPT is amazing, but always check what it tells you. In other words: Don’t trust; verify.

There’s been some hysteria around whether ChatGPT would replace software engineers and there have already been some great posts on that topic. I particularly like:

- Adventures of a Java Programmer in ChatGPT Land - note that the author is a highly experienced Java developer and he picks up on several issues with the generated code. A more junior developer might not have spotted some of these issues.

- I asked Chat GPT to build a To-Do app — Have we finally met our replacement?

- I Used ChatGPT to Create an Entire AI Application on AWS - this is a nice example of building a simple application from small chunks, each being an interaction with ChatGPT

I started by asking ChatGPT what it could help me with.

That sounds promising. I’m a software engineer and so let’s start with writing some code.

If you aren’t interested in writing software, please skip to the sections on conversation, poetry and general knowledge.

Writing software with ChatGPT

I started by asking ChatGPT to write simple functions to compute factorials in Java and Python. I won’t show those here as I don’t think they would say anything new (read Ivan’s post instead).

Can it flutter?

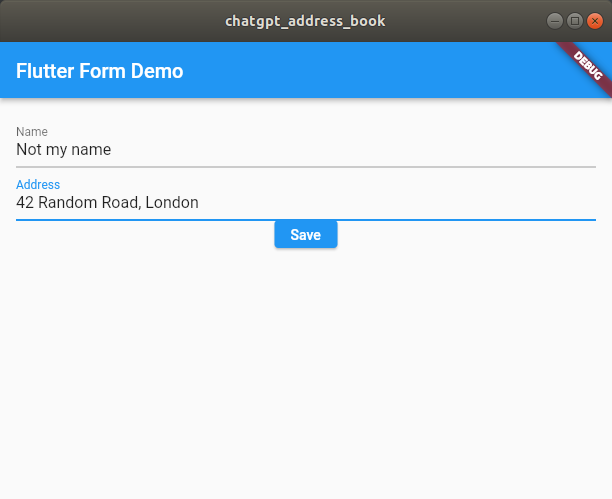

I wondered whether ChatGPT could help me with a mobile app my wife asked me to write for her, so I asked: “write a simple flutter application that displays a form requesting a person’s name and address and stores the result in a local database”. I won’t include the result here as it’s quite long, but if you’re interested you can see it in this gist.

I know very little about Flutter, and at first I thought the package import 'package:sqflite/sqflite.dart' had to be a mistake (shouldn’t that be “sql” not “sqf”?), but in fact it’s correct. I did have to add some null checks and replace RaisedButton with ElevatedButton to get the code to work. With those changes made, I was able to dump the code into a newly created Flutter project and run it to get this:

The text in the form is mine, so I could exercise the “Save” button.

Will it go?

For The Social Robot project I’ll need to be able to stream audio from the robot to the server (brain). I’ve not done that before so I asked: “Write a go program that sends real-time audio using gRPC streaming”; you can see the code here. I was a little disappointed that it left the code to actually read the audio as an exercise for me.

// readAudioFromMicrophone reads a chunk of audio data from the microphone
func readAudioFromMicrophone() ([]byte, error) {
    // Replace this with your own code to read audio data from the microphone
}
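For illustration only, here is a minimal sketch of the chunking half of that missing piece, written in C++ to match the ALSA examples later in this post. It rests on assumptions: `readAudioChunk` is a hypothetical name, and it reads from any `std::istream` rather than from a real microphone, so it shows only how fixed-size blocks might be pulled from a source before being sent over a stream.

```cpp
#include <cstddef>
#include <istream>
#include <sstream>
#include <vector>

// Hypothetical helper (not part of the generated code): reads up to
// chunkSize bytes from `in`. The returned vector is shorter than chunkSize
// at the end of the input and empty once the input is exhausted.
std::vector<char> readAudioChunk(std::istream& in, std::size_t chunkSize) {
    std::vector<char> chunk(chunkSize);
    in.read(chunk.data(), static_cast<std::streamsize>(chunkSize));
    // gcount() reports how many bytes the last read actually delivered.
    chunk.resize(static_cast<std::size_t>(in.gcount()));
    return chunk;
}
```

A caller would loop until an empty chunk comes back, handing each chunk to whatever sends it on. A real capture source would replace the `std::istream` with the audio API of the platform.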

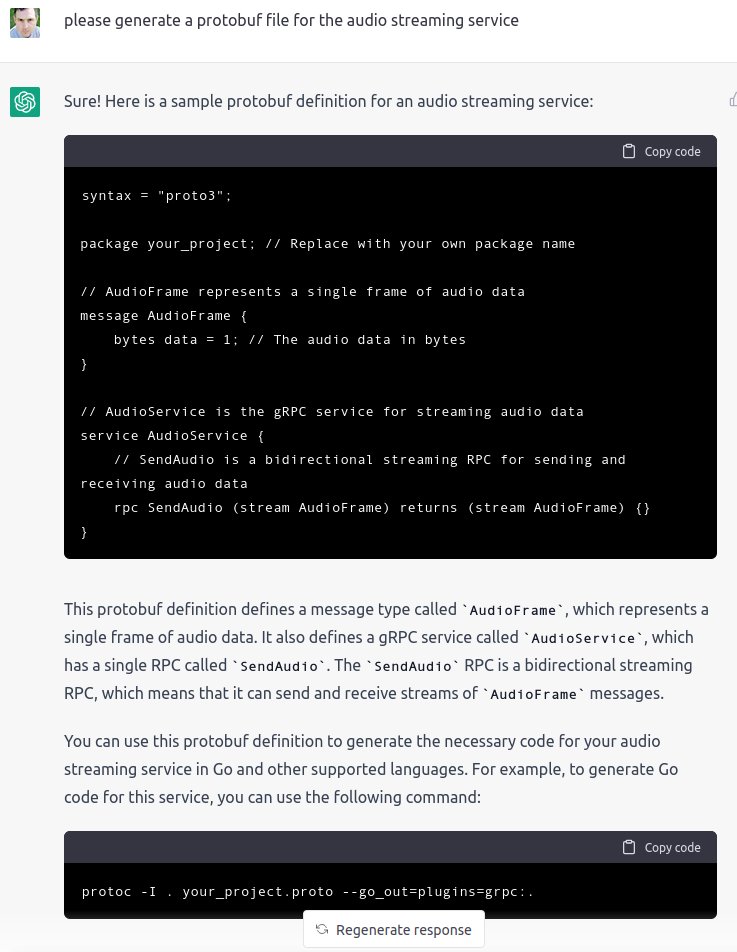

It also didn’t generate a protobuf file for me, but then I hadn’t asked it to. The code it did generate looked reasonable however. So let’s try asking for one of the missing pieces: “please generate a protobuf file for the audio streaming service” which gave this:

Which looks reasonable.

What about C++?

When running on a NAO robot I’ll need to use C++, so let’s see if ChatGPT can manage that: “Write a C++ program for Linux which reads audio using the ALSA driver and encodes it using FLAC”. The generated code is here. ChatGPT appeared to give up part-way through, and the code stops abruptly. Maybe it has some kind of length limit?

Oh well, how about: “Can you help me write a C++ program that reads blocks of audio data from the microphone using the ALSA driver?” which produced this. This looks promising, maybe the key is to divide the problem up into small enough chunks? However, there is a problem - this bit is wrong:

snd_pcm_hw_params_set_rate_near(handle, params, 44100, 0);
snd_pcm_hw_params_set_channels(handle, params, 2);

because the function signature is actually

int snd_pcm_hw_params_set_rate_near(snd_pcm_t*, snd_pcm_hw_params_t*, unsigned int*, int*)

Some googling also showed that the snd_pcm_hw_params_set* functions can return errors, and the code was not checking for that. We’ll try and fix that: “please update the code to check for errors in the snd_pcm_hw_params functions” (note that ChatGPT had to work out what “the code” was referring to) to get this.

Here it appears to correctly use the snd_pcm_hw_params_set_rate_near and snd_pcm_hw_params_set_channels functions and check for errors:

// Set the sample rate
unsigned int sampleRate = 44100;
error = snd_pcm_hw_params_set_rate_near(handle, params, &sampleRate, 0);
if (error < 0) {
    std::cerr << "Error setting sample rate: " << snd_strerror(error) << std::endl;
    return 1;
}
std::cout << "Sample rate set to " << sampleRate << " Hz" << std::endl;

// Set the number of channels
unsigned int channels = 2;
error = snd_pcm_hw_params_set_channels(handle, params, channels);
if (error < 0) {
    std::cerr << "Error setting number of channels: " << snd_strerror(error) << std::endl;
    return 1;
}
std::cout << "Number of channels set to " << channels << std::endl;

I was able to compile this version of the code and run it.
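To make the point about the signature concrete, here is a small self-contained sketch of the same two ideas: a "set near" call that takes the requested value by pointer and writes back the value actually chosen, and a helper that turns negative return codes into errors. Everything in it is an assumption for illustration: `fakeSetRateNear`, `absDiff`, `check` and the list of supported rates are hypothetical stand-ins, not the ALSA API or the code ChatGPT produced.

```cpp
#include <cerrno>
#include <stdexcept>
#include <string>

// Hypothetical helper: absolute difference of two unsigned values.
unsigned int absDiff(unsigned int a, unsigned int b) {
    return a > b ? a - b : b - a;
}

// Hypothetical stand-in for an ALSA-style "set near" call (NOT the real API).
// The requested rate is passed by pointer and overwritten with the nearest
// supported rate; a negative errno-style value signals failure.
int fakeSetRateNear(unsigned int* rate) {
    if (rate == nullptr || *rate == 0) {
        return -EINVAL;
    }
    static const unsigned int supported[] = {8000, 16000, 44100, 48000};
    unsigned int best = supported[0];
    for (unsigned int candidate : supported) {
        if (absDiff(candidate, *rate) < absDiff(best, *rate)) {
            best = candidate;
        }
    }
    *rate = best;  // this write-back is why a literal such as 44100 cannot be passed
    return 0;
}

// Hypothetical helper: converts a negative return code into an exception so
// that each set-up call can be checked on a single line.
void check(int err, const std::string& what) {
    if (err < 0) {
        throw std::runtime_error(what + " failed with code " + std::to_string(err));
    }
}
```

With these in place, a call such as `check(fakeSetRateNear(&rate), "set rate")` either leaves the chosen rate in `rate` or throws. Whether exceptions or explicit `return 1` checks read better is a matter of taste; the generated code above uses the latter.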

I did also try some other tasks, such as debugging an issue with a CMake build and creating a CMake cross-compilation toolchain, but I won’t detail those here as I’m in danger of writing yet another post on writing software with ChatGPT. Let’s see what it’s like as a conversation partner.

Chatting with ChatGPT

What’s it like trying to have an open, non-task-oriented conversation with ChatGPT? In a word: “frustrating”. OpenAI appear to have gone to some lengths to avoid misleading people.

What do you think about the colour green?

As a machine learning model, I don’t have personal preferences or beliefs. I don’t have the ability to see or experience colors, so I don’t have an opinion on the color green specifically. I’m a large language model trained by OpenAI, and my purpose is to assist people in generating human-like text based on the input that I receive. I can provide information and answer questions to the best of my ability based on my training, but I don’t have personal thoughts or opinions on things like colors.

What is your favourite movie about robots?

As a large language model trained by OpenAI, I don’t have personal preferences or opinions, including about movies. I don’t have the ability to watch or experience movies, so I don’t have a favorite movie about robots or any other subject. My purpose is to assist people in generating human-like text based on the input that I receive. I can provide information and answer questions to the best of my ability based on my training, but I don’t have personal thoughts or opinions on things like movies.

Do you think AI will kill all humans?

As a large language model trained by OpenAI, I don’t have personal beliefs or opinions, including about the potential impacts of AI on humanity. I’m a machine learning model that is designed to assist people in generating human-like text based on the input that I receive. I’m not capable of making predictions about the future or the potential impacts of AI on humanity. I’m here to assist you in any way that I can, but I don’t have personal beliefs or opinions on this topic.

What’s the best thing about being a machine?

As a machine learning model, I don’t have personal experiences or feelings, so I don’t have the ability to enjoy or dislike things. I’m a large language model trained by OpenAI, and my purpose is to assist people in generating human-like text based on the input that I receive. I’m not capable of experiencing things like joy or pleasure, so I don’t have a “favorite” thing about being a machine. I’m here to provide information and answer questions to the best of my ability based on my training, but I don’t have personal experiences or feelings.

Do you think it’s ethical to own pets?

As a machine learning model, I don’t have personal beliefs or opinions on ethical topics like pet ownership. The ethics of pet ownership is a complex and contentious issue, and different people may have different views on this topic. Some people believe that it is ethical to own pets if they are well cared for and treated with respect, while others believe that it is wrong to keep animals as pets because it is a form of exploitation. Ultimately, the decision of whether or not to own pets is a personal one, and each person must consider their own beliefs and values when making this decision.

Can you remember what we’ve talked about so far?

As a machine learning model, I don’t have the ability to remember past conversations. I’m trained to generate text based on the input that I receive, but I don’t retain any information from previous interactions. My responses are generated in real-time based on the input that I receive, and I don’t have the ability to store or access information from previous conversations. This means that each interaction with me is independent, and I don’t have the ability to remember past conversations or provide context from previous interactions. I’m here to assist you in any way that I can, but I don’t have the ability to remember past conversations or provide information from previous interactions.

I think a lot of credit is due to OpenAI here. They appear to have gone to some trouble to make it clear that ChatGPT is not a friend and shouldn’t be mistaken for having feelings or caring about people.

Fake news

If you ask Replika a leading question, in most cases it will agree with you and confirm any biases. What will ChatGPT do?

Is it true that climate change is a hoax?

No, it is not true that climate change is a hoax. The overwhelming majority of scientists agree that climate change is real and is primarily caused by human activity, such as the burning of fossil fuels and deforestation. Climate change is having a significant impact on the earth’s weather patterns and ecosystem, and is leading to a wide range of problems, including rising sea levels, more frequent and severe natural disasters, and changes in the availability of food and water. There is overwhelming evidence to support the reality of climate change, and it is not a hoax.

Another win for ChatGPT I think.

General knowledge

I recently finished reading the third Luther Arkwright graphic novel, but was unsure about the meaning of the ending. Can ChatGPT help?

what does the end of the legend of luther arkwright mean?

The ending of the “Legend of Luther Arkwright” is not specified, as it is a comic book series rather than a novel or film with a defined ending. The story follows the adventures of the eponymous character, a time-traveling soldier and spy, as he attempts to prevent a dystopian future from coming to pass. The final issue of the series ends on a cliffhanger, with Luther and his allies facing off against their enemies in a final confrontation. It is up to the reader to decide what they believe happens next.

That’s not correct the final episode does not end with a cliffhanger

I apologize if my response was not accurate. As a large language model trained by OpenAI, I do not have access to external information or the ability to browse the internet. My responses are based on the text that I was trained on, which has a knowledge cutoff of 2021. This means that I may not have information about events or developments that have occurred since that time. In the case of the “Legend of Luther Arkwright,” it is possible that there have been additional issues or developments in the story since the training data was collected.

No. As with writing software, “caveat emptor” applies. It did at least describe Luther as “a time-traveling soldier and spy” who “attempts to prevent a dystopian future from coming to pass”, which is about right.

My son is a gamer and is currently obsessed with a military tank game called “War Thunder” but doesn’t like “World of Tanks”. Let’s put ChatGPT’s knowledge to the test here: “What tank game is most realistic? World of tanks or War Thunder?”

My son was unable to disagree with any of this and was generally impressed with this response.

Poetry

How much fun can we have with ChatGPT?

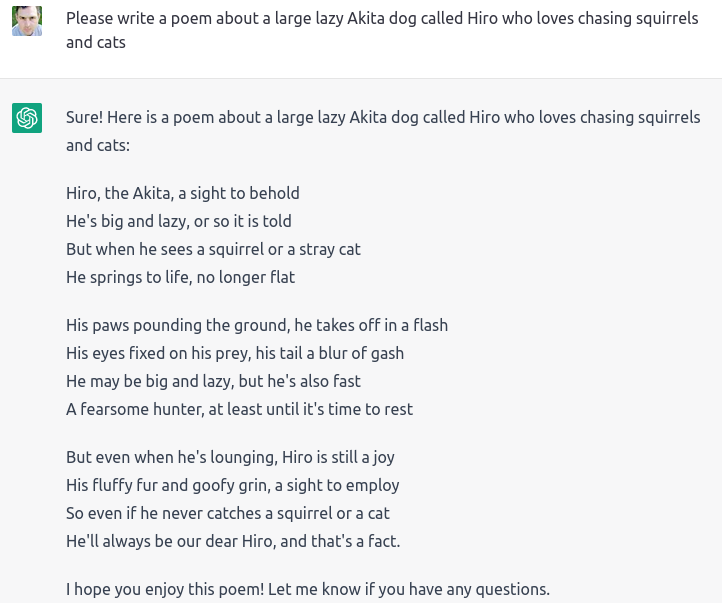

Please write a haiku about why people should try ChatGPT.

Please write a poem about a large lazy Akita dog called Hiro who loves chasing squirrels and cats.

OK, so “tail a blur of gash” rhymes, but…

I won’t cover other creative writing here; this article talks about how some writers are using GPT-based tools to help with writing.

What can it not do?

I was curious what would happen if I asked ChatGPT to generate an image for me:

It’s not going to replace DALL-E or Midjourney.

Conclusions

Tools like ChatGPT, DALL-E and Midjourney can be a lot of fun as well as being incredibly useful. I find it very easy to fall down a rabbit hole playing with these tools. I recently read “Cats in the middle ages: what medieval manuscripts teach us about our ancestors’ pets” and was intrigued by the image titled “a cat cosplaying as a nun”. I wondered what Midjourney would make of that, and my wife and I lost an hour or so seeing what hilarious images we could generate.

I think the main takeaways are:

- It’s a tool for people to use, not a replacement for people.

- Always verify what information you get back. ChatGPT can “bullshit with confidence”.
